Overview

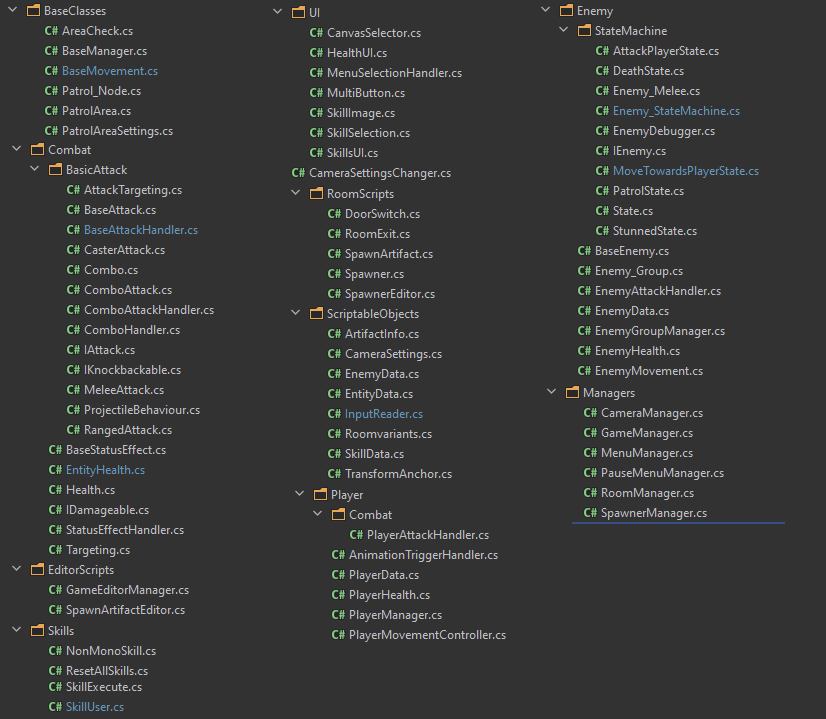

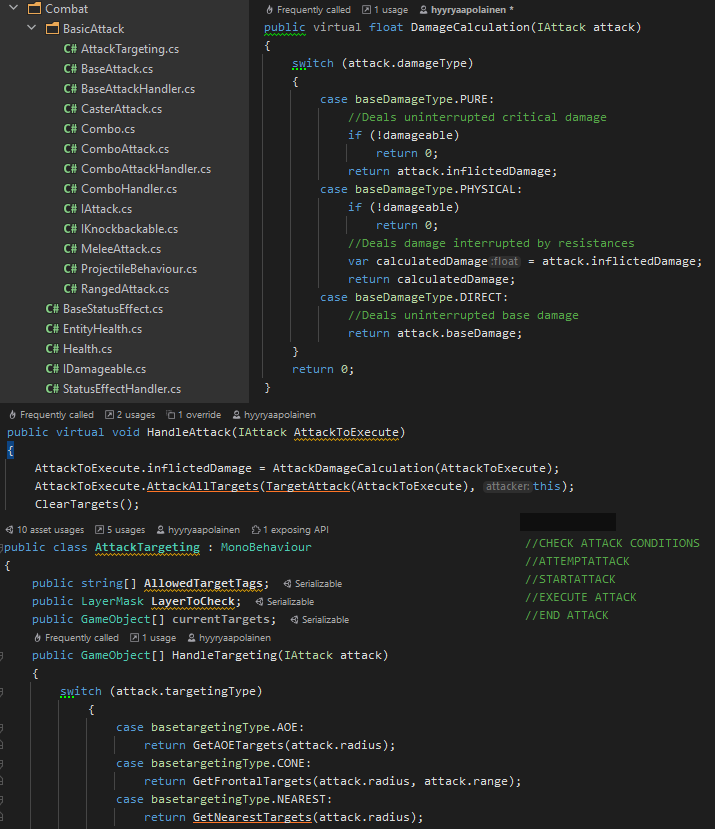

I implemented our combat system from a combat mechanics diagram provided by the content producers.

The goal was fluid combat with simple combos, animation cancelling, support for multiple weapons, critical hits, and status effects that affect entities.

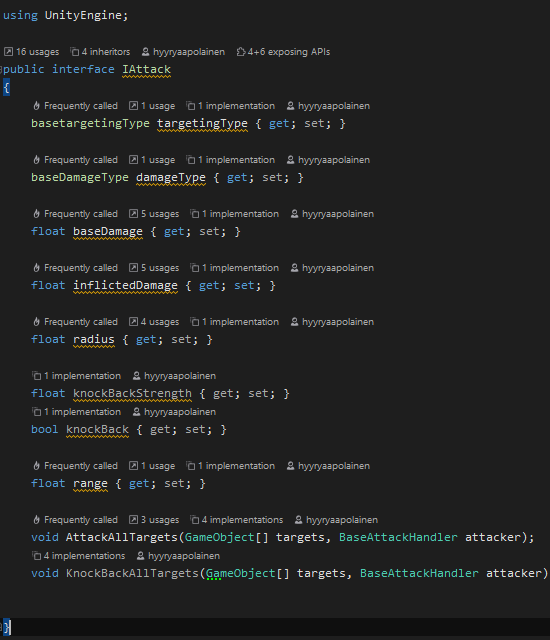

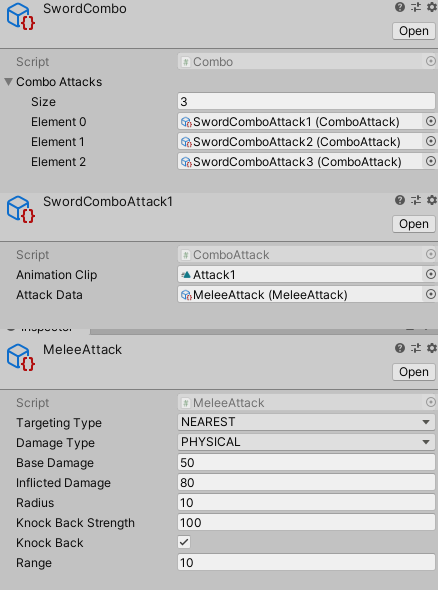

I used Unity's ScriptableObjects to create combo attacks, each with its own animation and attack parameters. I also experimented with creating an interface for the attack.
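To make the data-driven idea concrete, here is a minimal, engine-neutral sketch in Python rather than the project's Unity code. It assumes an attack asset is a bundle of designer-editable parameters, and that an attack "interface" is anything able to resolve those parameters into a damage value. Every name here (`AttackData`, `Attack`, `MeleeAttack`, `resolve`, and all field names) is hypothetical and not taken from the project.

```python
import random
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class AttackData:
    """Designer-editable parameters for one combo piece (hypothetical fields)."""
    name: str
    animation: str                 # name of the animation clip to play
    damage: float
    crit_chance: float = 0.0       # probability in [0, 1]
    crit_multiplier: float = 2.0


class Attack(Protocol):
    """Assumed attack interface: resolve one attack into a damage value."""

    def resolve(self, rng: random.Random) -> float: ...


@dataclass(frozen=True)
class MeleeAttack:
    """One possible implementation of the assumed Attack interface."""
    data: AttackData

    def resolve(self, rng: random.Random) -> float:
        # Roll once; a roll below crit_chance counts as a critical hit.
        is_crit = rng.random() < self.data.crit_chance
        multiplier = self.data.crit_multiplier if is_crit else 1.0
        return self.data.damage * multiplier


# Two example "assets" a designer might author.
light_slash = AttackData(name="Light Slash", animation="slash_01", damage=10.0)
heavy_slam = AttackData(name="Heavy Slam", animation="slam_01", damage=25.0,
                        crit_chance=1.0, crit_multiplier=2.0)
```

Keeping the parameters in a plain data object separate from the attack logic is what lets new attacks be authored without touching code; in Unity the data object would typically be a ScriptableObject asset.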

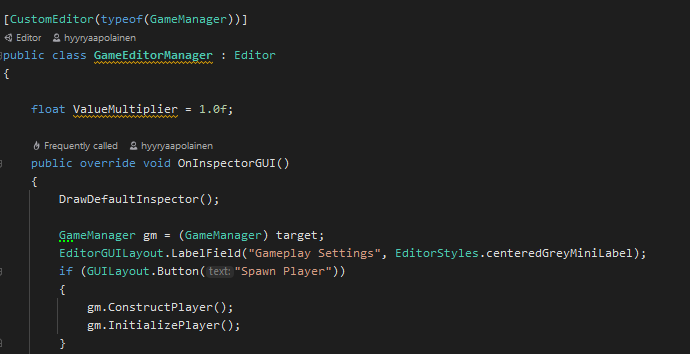

Game designers can choose from a range of options to build different attacks and chain them into interesting combos.

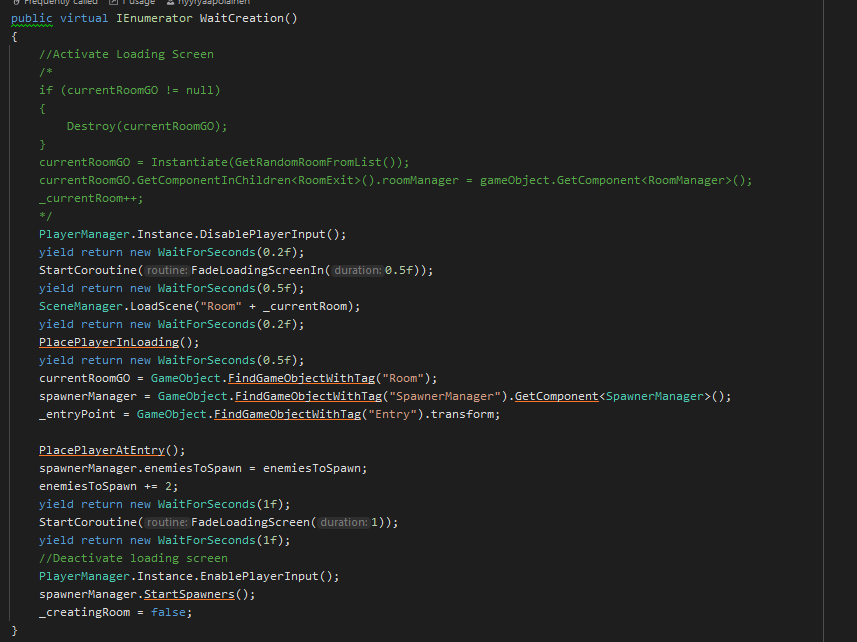

The attack handler then uses the provided combo pieces to execute attacks in sequence.
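Sequential execution of combo pieces can be sketched as a small state machine. The version below is an engine-neutral Python illustration, not the project's implementation. It assumes two behaviours the text does not specify: the combo resets to the first piece if no attack input arrives within a time window, and it wraps back to the start after the last piece. `ComboRunner`, `combo_window`, `tick`, and `attack` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ComboRunner:
    """Steps through an ordered list of combo pieces, one per attack input."""
    pieces: List[str]
    combo_window: float = 0.6                       # seconds (assumed value)
    _index: int = field(default=0, init=False)
    _since_last: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        if not self.pieces:
            raise ValueError("a combo needs at least one piece")

    def tick(self, dt: float) -> None:
        """Advance time; drop the combo if the input window has expired."""
        self._since_last += dt
        if self._since_last > self.combo_window:
            self._index = 0

    def attack(self) -> str:
        """Return the piece to execute now and advance to the next one."""
        piece = self.pieces[self._index]
        self._index = (self._index + 1) % len(self.pieces)  # wrap (assumed)
        self._since_last = 0.0
        return piece
```

A typical call pattern would be `tick(delta_time)` once per frame and `attack()` whenever the attack input is accepted.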

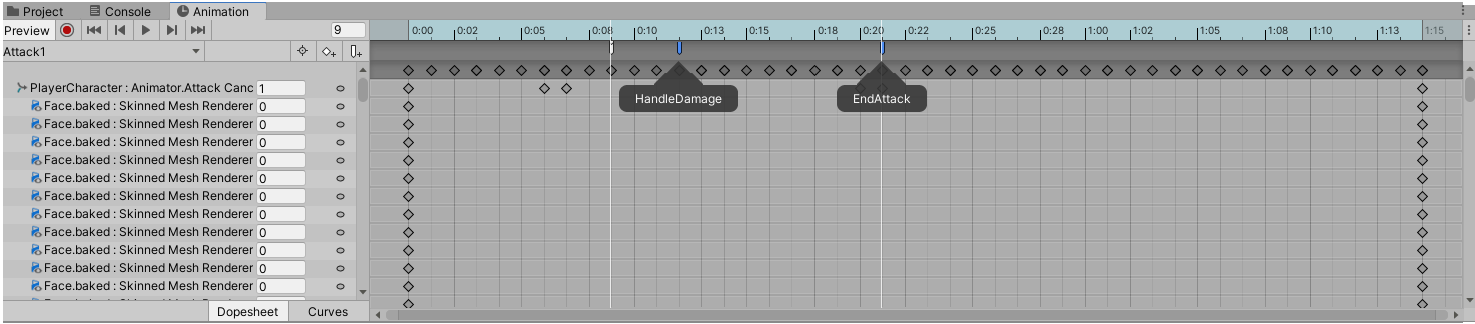

Animation cancelling is handled inside the animator by checking the current attack phase, a check that is accurate to the individual animation frame.
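The phase check can be illustrated with a frame-indexed timeline. This is an engine-neutral Python sketch under stated assumptions, not the animator setup used in the project: it assumes three phases (wind-up, active, recovery) and that only the recovery phase may be cancelled. The phase names, `AttackTimeline`, `CANCELLABLE`, and `can_cancel` are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class AttackPhase(Enum):
    WINDUP = auto()
    ACTIVE = auto()
    RECOVERY = auto()


@dataclass(frozen=True)
class AttackTimeline:
    """Frame markers that split one attack animation into phases."""
    active_start: int     # first frame on which the hit can land
    recovery_start: int   # first frame of the recovery phase
    total_frames: int

    def phase_at(self, frame: int) -> AttackPhase:
        if not 0 <= frame < self.total_frames:
            raise ValueError(f"frame {frame} is outside the animation")
        if frame < self.active_start:
            return AttackPhase.WINDUP
        if frame < self.recovery_start:
            return AttackPhase.ACTIVE
        return AttackPhase.RECOVERY


# Assumption: only recovery frames may be cancelled into another action.
CANCELLABLE = frozenset({AttackPhase.RECOVERY})


def can_cancel(timeline: AttackTimeline, frame: int) -> bool:
    """True if the animation may be interrupted on this exact frame."""
    return timeline.phase_at(frame) in CANCELLABLE
```

Because the phase is derived from frame markers, moving a marker by a single frame changes exactly when a cancel becomes available, which is the kind of precision the text describes.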

Attack Interface

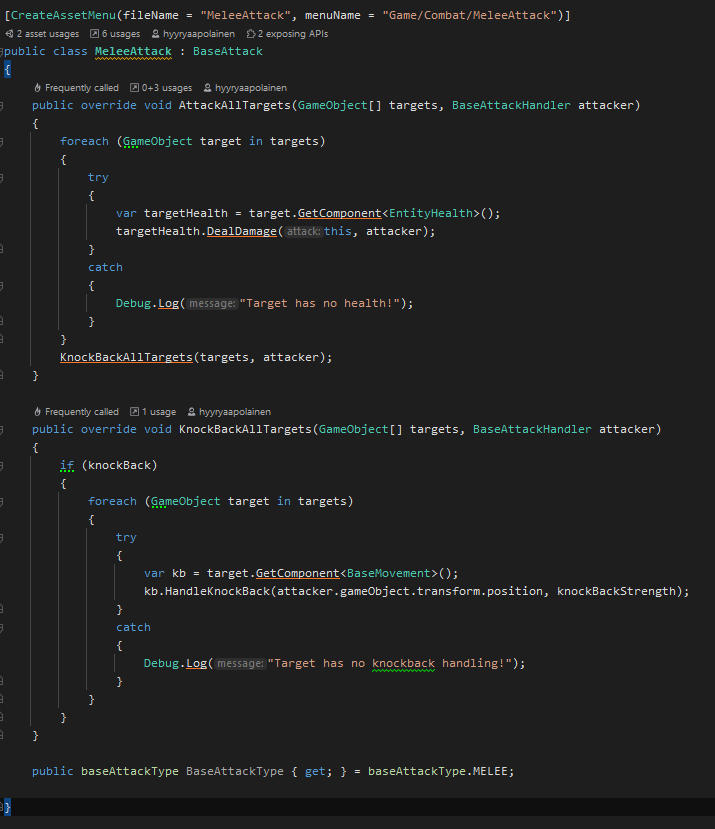

Melee Attack

Attack Inspector

Attack Logic

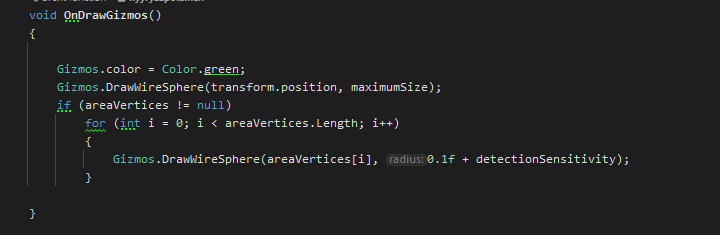

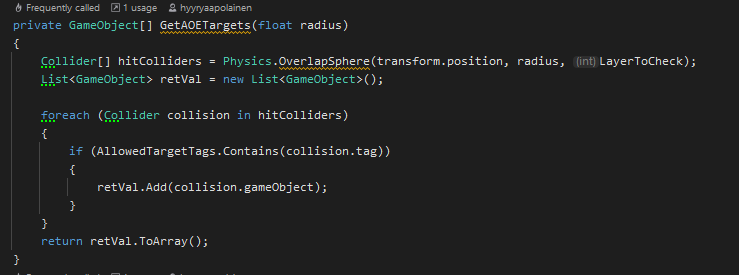

AoE Targeting

Player Animator