Overview

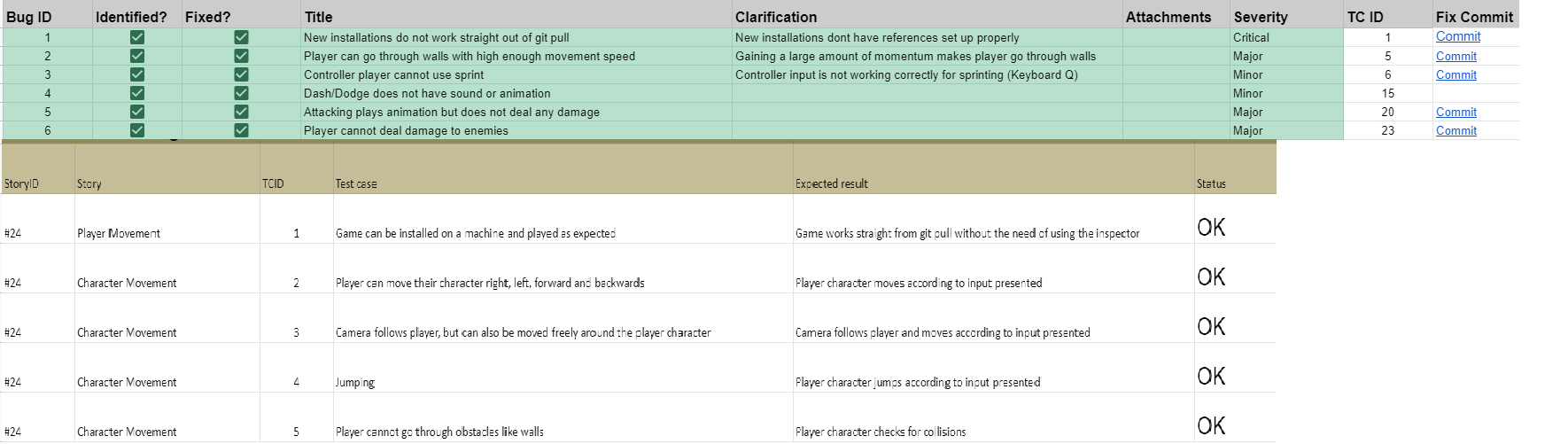

Our High-Level Test Plan included a biweekly stability test in which test cases were evaluated against their in-game implementation.

We held internal concept discussions to flesh out the game's vision and communicate it to every member of the team.

We held two public concept tests. In the first, individual playtesters came in, recorded their gameplay sessions, and gave feedback on their experience.

The second was a blind test in which testers gave their feedback through a questionnaire form.

At the end of each sprint, I compiled the results of every test held during it and discussed them with the team to produce action points we could tackle in the next sprint.

Internal Test 1

Concept Test 2

Bug reporting